Limiting the nuisance of Bots, Crawlers and Spiders

Bots and crawlers help search engines like Google find and rank your website. But too much activity from these bots can slow down your website’s performance. Here, we’ll discuss how to stop unwanted bots and make your website run faster and more securely.

Bad bots with bad intentions

In addition to the useful search engine bots, there are also "bad bots" that can cause damage. These bots probe for version numbers of popular software such as WordPress or Magento so that they can exploit known vulnerabilities. They also harvest email addresses to send spam. It is therefore important to protect your website against these unwanted visitors.

How do you limit the nuisance of bots on your website?

Most search engines regulate the frequency with which they visit your site, but if you’re experiencing problems, you can take action. Here are a few ways you can reduce the impact of bots:

1. Use of robots.txt

You can manage bots with the robots.txt file, which is located in the root of your website. This file tells bots which pages they may and may not crawl. Not all search engines follow the same rules, but you can use the Crawl-delay directive to slow down bots that honor it, such as those of Bing and Majestic-12. The value is generally interpreted as the number of seconds a bot should wait between requests. You can set the crawl speed to:

1: Slow

5: Very slow

10: Extremely slow

To control individual bots, add the following to your robots.txt:

User-agent: MJ12bot
Crawl-delay: 5

For all bots at once you can use the following:

User-agent: *
Crawl-delay: 10
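To check how a standards-following parser reads such rules, you could run something like the sketch below. It uses Python's standard urllib.robotparser module. The bot name SomeOtherBot and the helper function names are made up for illustration, and the requests-per-day figure assumes that a bot treats the value as seconds between requests, which not every bot does.

```python
from urllib.robotparser import RobotFileParser

# The two rule groups from this article, written as one robots.txt file.
ROBOTS_TXT = """\
User-agent: MJ12bot
Crawl-delay: 5

User-agent: *
Crawl-delay: 10
"""


def crawl_delays(robots_txt, agents):
    """Return the Crawl-delay that applies to each user agent (None if unset)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.crawl_delay(agent) for agent in agents}


def max_requests_per_day(delay_seconds):
    """Upper bound on daily requests, ASSUMING the delay means seconds between requests."""
    return 86400 // delay_seconds


if __name__ == "__main__":
    # "SomeOtherBot" is a hypothetical name; it falls back to the "*" group.
    delays = crawl_delays(ROBOTS_TXT, ["MJ12bot", "SomeOtherBot"])
    for agent, delay in delays.items():
        print(f"{agent}: delay {delay}s, at most {max_requests_per_day(delay)} requests/day")
```

Note that the directive has to be spelled exactly Crawl-delay, with a hyphen. Python's parser silently ignores other spellings, and real bots are likely to do the same.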

2. Manage Googlebot via Search Console

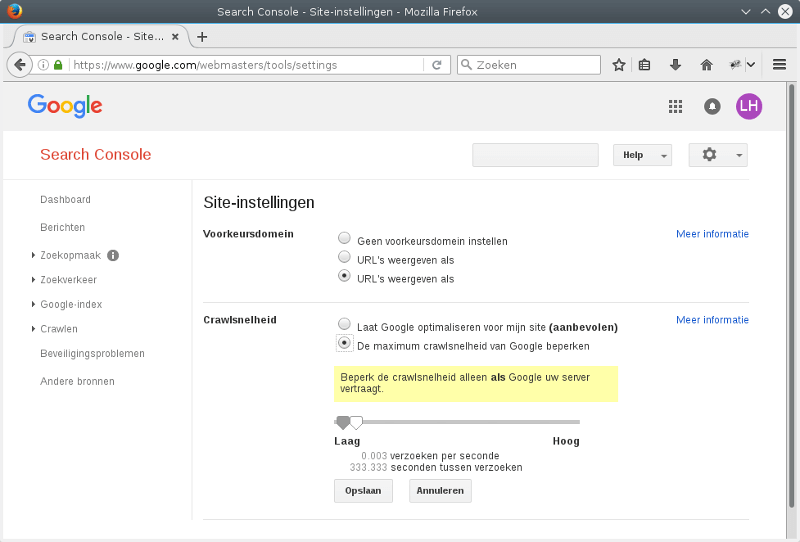

Google's bot respects your robots.txt, but ignores the Crawl-delay directive. However, you can adjust the crawl speed yourself via Google Search Console. If you are not yet familiar with how Google Search Console works or what it is useful for, Google Webmasters is a good place to start. Go to Site settings and choose a suitable speed. Do you need help? An SEO specialist can advise you on the correct settings.

3. Protection with WordPress plugins

Are you using WordPress? There are handy plugins available to block bots. These plugins mainly help in blocking bad bots, without affecting search engine indexing.

4. Block bots with Plesk or .htaccess

Do you have a website on a Plesk server? You can block certain bots via the .htaccess file. There is a ready-made script available on GitHub called Bad Bot Blocker.
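If you only want to block a handful of bots, a small hand-written rule set may be enough. The sketch below is not the Bad Bot Blocker script. It is a minimal illustration that generates Apache mod_rewrite rules returning 403 Forbidden for matching user agents. The function name build_htaccess_block and the bot name ExampleBadBot are hypothetical, and it assumes mod_rewrite is enabled on your server.

```python
import re

# Only allow simple names so the generated regex needs no escaping or quoting.
_SAFE_NAME = re.compile(r"^[A-Za-z0-9_-]+$")


def build_htaccess_block(bot_names):
    """Build .htaccess rules that deny requests whose User-Agent contains a listed name."""
    if not bot_names:
        raise ValueError("at least one bot name is required")
    for name in bot_names:
        if not _SAFE_NAME.match(name):
            raise ValueError(f"unsupported characters in bot name: {name!r}")

    pattern = "|".join(bot_names)
    lines = [
        "<IfModule mod_rewrite.c>",
        "RewriteEngine On",
        # [NC] makes the user-agent match case-insensitive.
        f"RewriteCond %{{HTTP_USER_AGENT}} ({pattern}) [NC]",
        # "-" leaves the URL untouched; [F] answers with 403 Forbidden.
        "RewriteRule .* - [F,L]",
        "</IfModule>",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # MJ12bot is the example agent from this article; ExampleBadBot is made up.
    print(build_htaccess_block(["MJ12bot", "ExampleBadBot"]))
```

Paste the printed rules into the .htaccess file in your website's root, and test on a non-production site first. Keep in mind that user agents are easy to fake, so this mainly stops bots that identify themselves honestly.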

5. Automatic protection with High Performance hosting

On our High Performance hosting we block many bad bots by default, so you don't have to do anything yourself. Our experts are always ready to help you with questions about bots and crawlers.

Want to learn more about blocking bots or have specific questions? Feel free to contact us!